About the Project » History » Revision 11


Ty Hikaru DAULTON, 10/21/2024 01:39 PM


About the Project

Our project aims to deliver a final product that combines the images from two separate projectors into a single seamless image. The two images are processed with image processing techniques, including gamma correction, alpha blending, and intensity modification, to achieve the desired final appearance. Our team consists of a project manager, a team leader, and sub-teams dedicated to Doxygen documentation generation, wiki management, coding, commenting, and UML design.

We primarily rely on OpenCV and Python for our image processing.

Key Aspects of the Project:

- Image Processing with Python and OpenCV: We use Python in combination with OpenCV, a comprehensive image processing library, to handle complex image analysis and processing tasks efficiently.

- Structured Design with UML: We apply Unified Modeling Language (UML) to create a clear and structured design for our project. UML allows us to visually represent the system's architecture and workflows, making the design easy to understand and follow.

- Thorough Documentation with Doxygen: Our code is meticulously documented using Doxygen, ensuring that it is clear, maintainable, and adaptable for future use.

- Project Management with Redmine: We use Redmine to manage and track project progress, coordinate tasks, and facilitate team collaboration. This tool helps keep the project organized and on schedule.
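To illustrate the Doxygen convention mentioned above: Doxygen recognizes Python comment blocks that begin with `##` and accepts special commands such as `@brief`, `@param`, and `@return` inside them. The function `normalize_pixel` below is a hypothetical example written for this page, not code from our repository.

```python
## @brief Normalize an 8-bit pixel value to the range [0, 1].
#  @param value Integer pixel value between 0 and 255 (hypothetical helper).
#  @return The value divided by 255.0.
def normalize_pixel(value: int) -> float:
    # Reject values outside the 8-bit range before normalizing.
    if not 0 <= value <= 255:
        raise ValueError("value must be between 0 and 255")
    return value / 255.0
```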

| Synopsis of Technology |

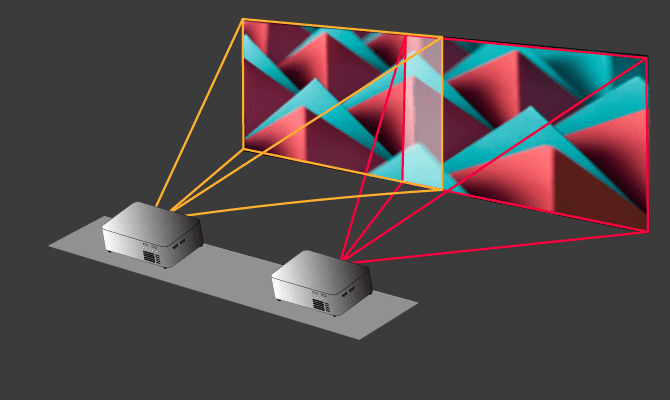

Our project's objective is to produce a single, large, seamless image on a flat screen using two projectors. The setup consists of a flat screen, two projectors, and two laptops, with the projectors aimed directly at the screen. To improve image quality, we apply techniques such as alpha blending and gamma correction.

Assume the screen width is 1280 mm and the two projectors are placed a distance d apart, where d is less than the screen width. The width x of the area where the two projected images overlap is then given by x = screen width - d. This formula relates the screen width, the distance between the projectors, and the size of the overlap area.
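A minimal sketch of the overlap calculation, assuming both the screen width and d are measured in millimetres. The function name `overlap_width` and the example distance of 1000 mm are hypothetical, chosen only to illustrate the formula.

```python
def overlap_width(screen_width_mm: float, d_mm: float) -> float:
    """Return the overlap width x = screen width - d (all values in millimetres)."""
    # The formula only makes sense when d is positive and smaller than the screen width.
    if not 0 < d_mm < screen_width_mm:
        raise ValueError("d must be positive and smaller than the screen width")
    return screen_width_mm - d_mm


# Hypothetical example: a 1280 mm screen with the projectors 1000 mm apart.
print(overlap_width(1280, 1000))  # 280
```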

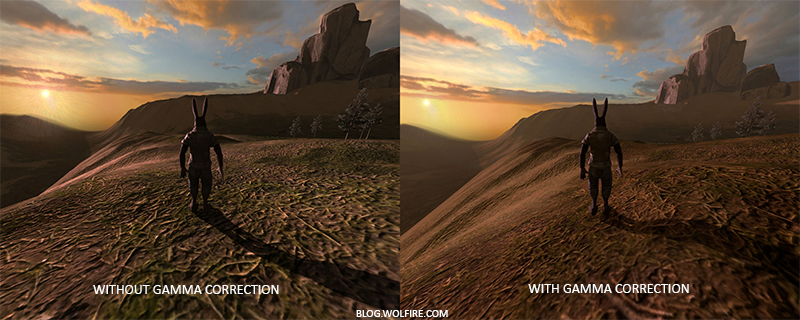

| Gamma Correction Method |

This method applies gamma correction to an image to adjust its luminance. Gamma correction is useful for correcting an image's brightness or adjusting its contrast. It is a nonlinear operation applied to each pixel value: unlike linear operations such as adding, subtracting, or multiplying by a constant, which shift or scale every pixel by the same amount, gamma correction changes each pixel by an amount that depends on its current value, so dark and bright regions are affected differently. The gamma value should be chosen with care. With the formula below, values below 1 brighten the image and values above 1 darken it; very small values push most pixels toward white, and very large values push them toward black.

gamma_corrected = (image / 255.0)^gamma * 255

Note: gamma is the supplied gamma value. Each pixel of the original 8-bit image is first normalized to the range 0 to 1 (divided by 255, since 8-bit pixel values range from 0 to 255), raised to the power of gamma, and then rescaled to the 0-255 range.
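A minimal NumPy sketch of the formula above, assuming 8-bit (uint8) input images. Because an 8-bit pixel can take only 256 values, the sketch precomputes a lookup table instead of raising every pixel to a power individually. The function name `gamma_correct` is hypothetical.

```python
import numpy as np


def gamma_correct(image: np.ndarray, gamma: float) -> np.ndarray:
    """Return 255 * (image / 255) ** gamma for an 8-bit image."""
    if image.dtype != np.uint8:
        raise TypeError("expected an 8-bit (uint8) image")
    if gamma <= 0:
        raise ValueError("gamma must be positive")
    # Normalize every possible 8-bit value to [0, 1], apply the power, rescale to 0-255.
    levels = np.arange(256, dtype=np.float64) / 255.0
    table = np.clip(np.round(levels ** gamma * 255.0), 0, 255).astype(np.uint8)
    # Fancy indexing maps each pixel through the table (works for gray or color images).
    return table[image]
```

In an OpenCV pipeline, the same 256-entry table could instead be applied with `cv2.LUT(image, table)`.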

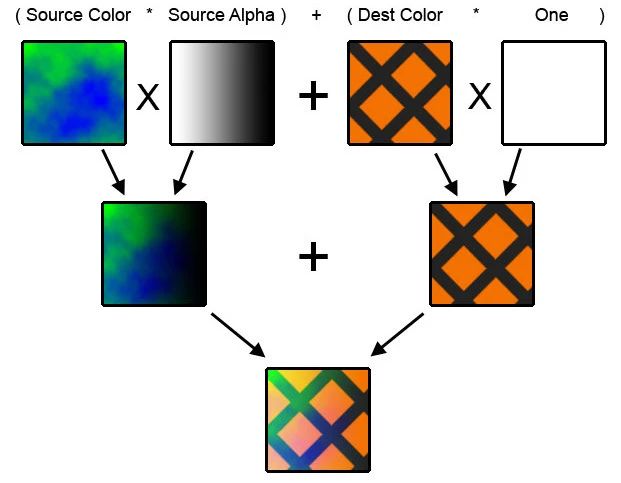

| Alpha Blending Method |

Alpha blending is a technique used in computer graphics when layering graphics in which one or more objects are partially transparent. Where the upper object is transparent, the color or brightness of the pixels visible underneath is adjusted according to the upper object's transparency level. An alpha channel acts as a mask that controls how much of the underlying graphics is displayed.

Our method applies alpha blending specifically to one edge of an image. It combines an image with a background to create the appearance of partial or full transparency, and here it is used to blend two images. The blending behavior depends on the value of image_side:

- If image_side is 1, the method blends the left edge of the image.

- If image_side is 0, it blends the right edge of the image.

Blending mixes two images, the gamma-corrected image and the current result image, using an alpha value that varies across the mask width (mask). This produces a smooth, gradual transition between the two images.
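The following NumPy sketch shows one way to implement the edge blend described above. It assumes 8-bit images of identical shape, a linear alpha ramp, and a blend band exactly mask pixels wide. Only `image_side` and `mask` come from the description; the function name `blend_edge`, the argument names `gamma_corrected` and `result`, and the choice that alpha rises from 0 at the outer edge to 1 at the inner end of the band are assumptions made for illustration.

```python
import numpy as np


def blend_edge(gamma_corrected: np.ndarray, result: np.ndarray,
               mask: int, image_side: int) -> np.ndarray:
    """Blend a band `mask` pixels wide at one edge of `result` with `gamma_corrected`.

    image_side == 1 blends the left edge; image_side == 0 blends the right edge.
    """
    if gamma_corrected.shape != result.shape:
        raise ValueError("both images must have the same shape")
    width = result.shape[1]
    if not 0 < mask <= width:
        raise ValueError("mask must be between 1 and the image width")

    # Assumed linear ramp: alpha = 0 at the outer edge, 1 at the inner end of the band.
    alpha = np.linspace(0.0, 1.0, mask)
    if image_side == 1:
        cols = slice(0, mask)              # left band; alpha grows left to right
    elif image_side == 0:
        cols = slice(width - mask, width)  # right band; alpha grows right to left
        alpha = alpha[::-1]
    else:
        raise ValueError("image_side must be 0 or 1")

    # Shape alpha as (1, mask) or (1, mask, 1) so it broadcasts over rows and channels.
    alpha = alpha.reshape((1, mask) + (1,) * (result.ndim - 2))

    out = result.astype(np.float64)  # float copy avoids uint8 overflow
    band = gamma_corrected[:, cols].astype(np.float64)
    # Standard alpha compositing: alpha * foreground + (1 - alpha) * background.
    out[:, cols] = alpha * band + (1.0 - alpha) * out[:, cols]
    return np.clip(np.round(out), 0, 255).astype(result.dtype)
```

OpenCV's `cv2.addWeighted` computes the same kind of weighted sum, but with a single constant weight per call, which is why the per-column ramp is written out explicitly in this sketch.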

| Intensity Modification |

This method modifies the intensity at the edges of an image. Similar to alpha blending, the effect changes based on the value of image_side:

- If image_side is 1, the intensity is gradually reduced towards the left edge.

- If image_side is 0, the intensity is gradually reduced towards the right edge.

The intensity factor decreases linearly across the mask width, reaching its lowest value at the image edge. This creates a fading effect in which the image gradually fades into the background or into another image.
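A companion NumPy sketch for the intensity fade, under the same assumptions as a simple edge blend: 8-bit images and a band mask pixels wide. The name `fade_edge` is hypothetical, and so is the choice that the factor falls linearly from 1 at the inner end of the band to 0 at the outermost column.

```python
import numpy as np


def fade_edge(image: np.ndarray, mask: int, image_side: int) -> np.ndarray:
    """Linearly fade the left (image_side == 1) or right (image_side == 0) edge of an 8-bit image."""
    width = image.shape[1]
    if not 0 < mask <= width:
        raise ValueError("mask must be between 1 and the image width")

    # Assumed factor: 0 at the outermost column, rising linearly to 1 at the inner end.
    factor = np.linspace(0.0, 1.0, mask)
    if image_side == 1:
        cols = slice(0, mask)              # left band; factor grows left to right
    elif image_side == 0:
        cols = slice(width - mask, width)  # right band; factor grows right to left
        factor = factor[::-1]
    else:
        raise ValueError("image_side must be 0 or 1")

    # Broadcast the per-column factor over rows and, for color images, channels.
    factor = factor.reshape((1, mask) + (1,) * (image.ndim - 2))
    out = image.astype(np.float64)
    out[:, cols] *= factor
    return np.clip(np.round(out), 0, 255).astype(image.dtype)
```

If the left projector's image is faded with image_side = 0 and the right projector's image with image_side = 1, and the two bands cover exactly the same screen columns, the two factors at each overlapping column sum to 1 in pixel-value terms.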

Updated by Ty Hikaru DAULTON over 1 year ago · 11 revisions