Home

Research

Members

Publications

Contact

Facilities

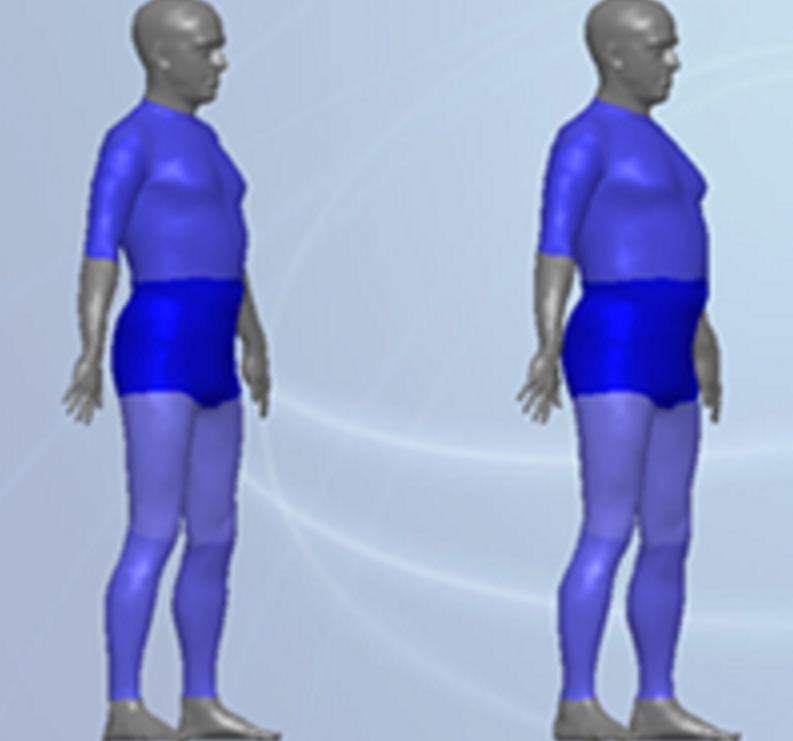

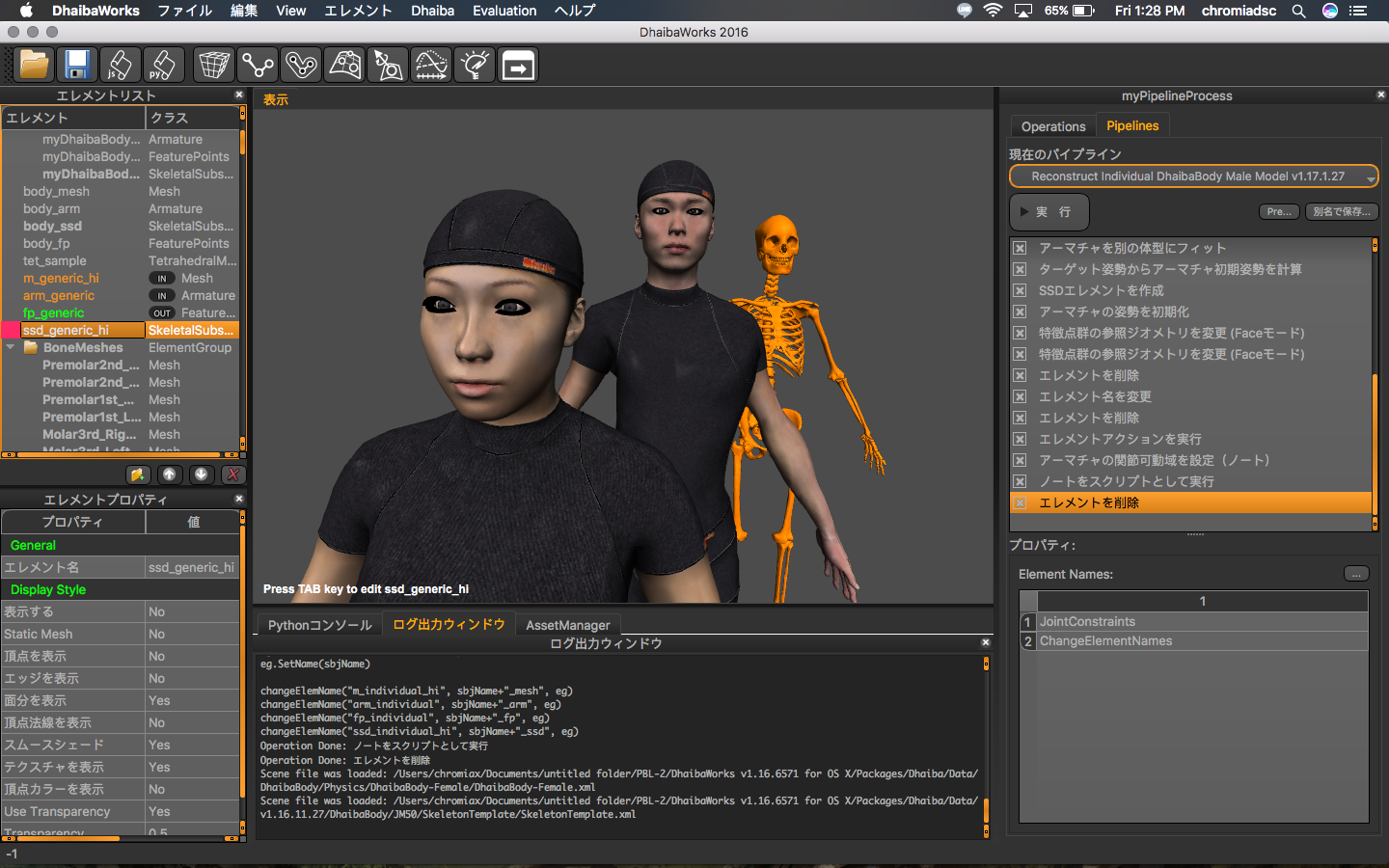

As a basis for our visualization systems, we develop real-time algorithms and methods for gridding, volume rendering, 3D segmentation, mesh reconstruction, and morphing that are specific to human-oriented modeling. We model body shapes using Digital Human manikin models and 3D full-body scan data. Body shapes can be modeled for scenarios such as weight gain or loss, muscularity gain, and the effects of ageing, which are important for healthcare and beauty services.
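Morphing a body shape for scenarios like these can be illustrated with a blend-shape model: each scenario (for example weight gain or muscularity gain) is stored as a target mesh in vertex-to-vertex correspondence with a base body mesh, and a weighted sum of per-vertex offsets yields intermediate shapes. This is a minimal sketch that assumes all meshes were already registered to one common template topology; the function name `blend_shapes` and the linear model are illustrative assumptions, not necessarily the morphing method used here.

```python
from typing import List, Sequence, Tuple

Vertex = Tuple[float, float, float]


def blend_shapes(
    base: Sequence[Vertex],
    targets: Sequence[Sequence[Vertex]],
    weights: Sequence[float],
) -> List[Vertex]:
    """Linear blend-shape morph (illustrative sketch).

    Each target mesh must list its vertices in the same order as `base`.
    The result is base + sum_i w_i * (target_i - base), so weight 0 leaves
    the base unchanged and weight 1 applies the full target offset.
    """
    if len(targets) != len(weights):
        raise ValueError("need exactly one weight per target mesh")
    result = [[float(c) for c in v] for v in base]
    for target, w in zip(targets, weights):
        if len(target) != len(base):
            raise ValueError("target mesh does not match base vertex count")
        for i, (vb, vt) in enumerate(zip(base, target)):
            for k in range(3):
                # add the weighted per-vertex offset for this scenario
                result[i][k] += w * (vt[k] - vb[k])
    return [tuple(v) for v in result]


# Toy two-vertex "meshes": half of a weight-gain target plus a full muscle target.
base = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
weight_gain = [(0.0, 0.0, 0.0), (2.0, 0.0, 0.0)]
muscle = [(0.0, 1.0, 0.0), (1.0, 0.0, 0.0)]
print(blend_shapes(base, [weight_gain, muscle], [0.5, 1.0]))
```

Because each scenario contributes an independent offset, scenarios can be combined (for example partial weight gain together with ageing) simply by setting several weights at once.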

Combined with motion capture (MoCap) data, 3D scans are used to estimate the dynamic parameters needed to model human movements realistically. Digital Hand models are used to estimate ergonomic factors such as grasp quality and stability.
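As one illustration of deriving dynamic parameters from scan data, the sketch below computes the volume, mass, and center of mass of a closed, triangulated body segment. It assumes uniform density, vertex coordinates in metres, and outward-facing triangles; the 1000 kg/m^3 default (roughly the density of water) and the name `mass_properties` are placeholders for illustration, since the text does not say which method or body-density model is used. Splitting a full-body scan into limb segments is not shown.

```python
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def _cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def mass_properties(
    vertices: Sequence[Vec3],
    faces: Sequence[Tuple[int, int, int]],
    density: float = 1000.0,  # assumed uniform density in kg/m^3
) -> Tuple[float, float, Vec3]:
    """Return (volume, mass, center_of_mass) of a closed triangle mesh.

    Each face forms a tetrahedron with the origin; by the divergence theorem
    the signed tetrahedron volumes sum to the enclosed volume, and their
    volume-weighted centroids give the center of mass.
    """
    volume = 0.0
    moment = [0.0, 0.0, 0.0]
    for i, j, k in faces:
        v0, v1, v2 = vertices[i], vertices[j], vertices[k]
        signed_vol = _dot(v0, _cross(v1, v2)) / 6.0
        volume += signed_vol
        for axis in range(3):
            # centroid of tetrahedron (origin, v0, v1, v2) is (v0 + v1 + v2) / 4
            moment[axis] += signed_vol * (v0[axis] + v1[axis] + v2[axis]) / 4.0
    if volume <= 0.0:
        raise ValueError("mesh must be closed with outward-facing triangles")
    com = (moment[0] / volume, moment[1] / volume, moment[2] / volume)
    return volume, density * volume, com


# Unit right tetrahedron as a stand-in for a scanned segment.
verts = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]
faces = [(0, 2, 1), (0, 1, 3), (0, 3, 2), (1, 2, 3)]
print(mass_properties(verts, faces))
```

In practice the segment masses and centers of mass obtained this way could then be paired with MoCap joint trajectories to compute inertial forces, but how the two data sources are combined is not specified here.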

We focus on visualization systems with haptic devices for VR surgery and VR nursing. Digital Human modeling is driven by real-time sensing and feedback devices (trackers, accelerometers, haptics) and uses experimentally collected databases (3D scans, CT/MRI data). We also process experimental data collected from haptic devices to model and predict human hand movement in constrained dynamic environments and to study human balancing and reaction skills.

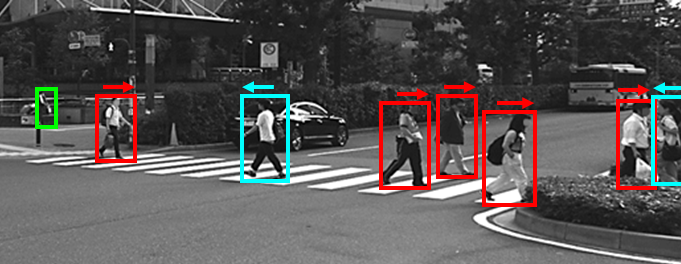

Autonomous driving is a promising technology for enhancing safety and mobility. In the near future, self-driving vehicles will inevitably coexist with human-driven vehicles. To share traffic resources harmoniously, self-driving vehicles must learn behavioral customs from human drivers, so incorporating human-driver traits into autonomous driving behavior is an important topic. This research will build a machine-learning-based model that learns human drivers' perception and decision-making in complex, crowded urban environments.

An Augmented Reality (AR) navigation system in smart glasses could offer pedestrians a new experience compared with conventional smartphone navigation. Accurate positioning is fundamental to a satisfactory AR-based navigation service. However, none of the common positioning methods, namely the Global Navigation Satellite System (GNSS), Pedestrian Dead Reckoning (PDR), and Wi-Fi, performs satisfactorily in urban environments, whether indoors or outdoors. This research will leverage camera sensing and the rich information in open map sources to improve positioning accuracy. Moreover, navigation information will be visualized by integrating it with the real scene using online, real-time object detection and matching.
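PDR advances the position by one step length along the current heading, so its error accumulates over time; an absolute fix, such as one derived from camera sensing and map data, can pull the estimate back. The sketch below shows that idea under stated assumptions: step length and heading are already estimated from inertial sensors, heading is measured clockwise from north, and a hypothetical camera/map-matching module returns an (east, north) fix in metres or `None`. The fixed `camera_weight` blend is a deliberately simple stand-in for a proper estimator such as a Kalman filter, not the method proposed in this research.

```python
import math
from typing import Iterable, Optional, Tuple

Position = Tuple[float, float]  # (east, north) in metres


def pdr_step(pos: Position, step_length: float, heading_rad: float) -> Position:
    """Dead-reckon one detected step; heading is clockwise from north."""
    east, north = pos
    return (east + step_length * math.sin(heading_rad),
            north + step_length * math.cos(heading_rad))


def fuse(pdr_pos: Position, camera_fix: Optional[Position],
         camera_weight: float = 0.8) -> Position:
    """Blend the PDR estimate with a camera/map fix when one is available."""
    if camera_fix is None:
        return pdr_pos
    w = camera_weight  # assumed trust in the camera fix, in [0, 1]
    return ((1 - w) * pdr_pos[0] + w * camera_fix[0],
            (1 - w) * pdr_pos[1] + w * camera_fix[1])


def track(start: Position,
          steps: Iterable[Tuple[float, float, Optional[Position]]]) -> Position:
    """steps: (step_length, heading_rad, camera_fix or None) per detected step."""
    pos = start
    for length, heading, fix in steps:
        pos = fuse(pdr_step(pos, length, heading), fix)
    return pos


# One step north, then one step east, with no camera fix: ends near (1, 1).
print(track((0.0, 0.0), [(1.0, 0.0, None), (1.0, math.pi / 2, None)]))
```

Between camera fixes the estimate is pure dead reckoning, which matches the motivation above: PDR alone drifts, and camera sensing combined with map information supplies the correction.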